Multi-GPU OpenGL on OSX with GLFW 3.3

At the Oxford Robotics Institute (ORI) we frequently use OpenGL (as well as OpenCL) for compute, because it gives good performance across different hardware (NVidia / AMD / Intel) and operating systems (OSX / Linux). However, it is not easy to target specific GPUs with OpenGL so that the load can be split across a machine, which is clearly preferable for performance.

For example, here is one of our OpenGL apps, which calculates disparity at ~30Hz. One of our vehicles has 10 stereo camera pairs, so before running anything else we want to distribute this load optimally so that each disparity app can run in real time.
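As a rough illustration of what "distributing the load" means, here is a naive round-robin assignment of app instances to GPUs. This is a hypothetical helper, not part of our stack: it assumes every instance costs about the same and that each GPU is identified by an integer (in practice, the OpenGL virtual screen number used later in this post). It only spreads the count evenly; it says nothing about whether a GPU can keep up with its share.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: assign each disparity instance (one per stereo pair)
// to a GPU virtual screen in round-robin order, so the instances are spread
// as evenly as possible over the screens listed in gpuVirtualScreens.
std::vector<int> AssignInstancesRoundRobin(
    int numInstances, const std::vector<int>& gpuVirtualScreens) {
  assert(!gpuVirtualScreens.empty());  // need at least one GPU to assign to
  std::vector<int> assignment;
  for (int i = 0; i < numInstances; ++i) {
    const std::size_t slot =
        static_cast<std::size_t>(i) % gpuVirtualScreens.size();
    assignment.push_back(gpuVirtualScreens[slot]);
  }
  return assignment;
}
```

With 10 instances and two GPU virtual screens {0, 1}, the result alternates 0, 1, 0, 1, ... so each GPU receives 5 instances.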

Terminology

It's worth clarifying here that I am going to use the term renderer to describe any OpenGL-capable device. On modern OSX machines this normally means some combination of dedicated GPUs (i.e. AMD / NVidia), Intel HD integrated graphics and Apple-provided software rendering. Choosing between these renderers is exactly the decision we want to make when launching an OpenGL process.

Problem

Targeting an OpenGL renderer is normally done by attaching the OpenGL window to a particular display. On Linux this is somewhat more straightforward than on OSX. On OSX you might attach a dummy HDMI connector to each renderer to create a display (e.g. this). But that doesn't help if you want to target a particular GPU in a multi-GPU setup for load balancing without these devices, or if the renderer has no display output at all. Not all renderers expose one to connect a display to (e.g. the second GPU in a Mac Pro, or Intel HD graphics when a dedicated GPU is also present).

Setup

Hardware-wise, we are going to work with a Mac Pro with dual AMD FirePro D700s and a MacBook Pro with an AMD Radeon R9 M370X and Intel Iris Pro graphics.

Software-wise, we are using Core OpenGL and GLFW v3.3 (note: this is a pre-release. You can achieve the same results with v3.2, but it's a lot messier…).
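With GLFW 3.3, the GLFW_COCOA_GRAPHICS_SWITCHING hint (used in the code below) takes care of allowing offline renderers; as far as we can tell from GLFW's Cocoa backend, it maps to the NSOpenGL pixel-format attribute that does this. The equivalent CGL attribute is kCGLPFAAllowOfflineRenderers. The sketch below is a hypothetical helper, not part of the original code: it uses raw CGL to ask how many virtual screens such a pixel format exposes. It is macOS-only (link with -framework OpenGL; CGL is deprecated since macOS 10.14 but still available) and returns -1 elsewhere so that it still compiles.

```cpp
#include <cassert>
#if defined(__APPLE__)
#include <OpenGL/OpenGL.h>
#endif

// Sketch: count the virtual screens exposed by a 3.2 Core pixel format that
// allows offline renderers (GPUs that are not driving a display).
// Returns 0 if no pixel format matches, and -1 on non-Apple platforms.
int CountVirtualScreensWithOfflineRenderers() {
#if defined(__APPLE__)
  const CGLPixelFormatAttribute attribs[] = {
      kCGLPFAOpenGLProfile,
      static_cast<CGLPixelFormatAttribute>(kCGLOGLPVersion_3_2_Core),
      kCGLPFAAllowOfflineRenderers,  // without this, offline GPUs are excluded
      static_cast<CGLPixelFormatAttribute>(0)};  // attribute list terminator
  CGLPixelFormatObj pix = nullptr;
  GLint nVirtualScreens = 0;
  if (CGLChoosePixelFormat(attribs, &pix, &nVirtualScreens) != kCGLNoError ||
      pix == nullptr) {
    return 0;
  }
  CGLReleasePixelFormat(pix);  // only the count was needed
  return static_cast<int>(nVirtualScreens);
#else
  return -1;  // CGL is only available on macOS
#endif
}
```

On a machine with an offline GPU, the count with kCGLPFAAllowOfflineRenderers should be higher than the count without it, which is a quick way to check what your hardware exposes.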

Solution

The solution comes from a 2014 WWDC talk. In summary, you set up OpenGL to allow offline renderers and then choose the renderer you want. If you already know which virtual screen number you want, you can drop most of the code. If you don't know it ahead of time, you can scan the renderers, as shown, to find one that is both accelerated (i.e. a GPU) and offline (not the main GPU), and then use it to select the virtual screen instead.
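The code below only switches to a virtual screen you name explicitly. If you want the "scan and pick" approach instead, the selection step itself is plain logic. A minimal sketch, assuming the renderer flags and virtual-screen mapping have already been gathered as in the code below; RendererSummary and FindOfflineGPUVirtualScreen are hypothetical names, not part of any API.

```cpp
#include <cassert>
#include <vector>

// Minimal stand-in for the per-renderer information gathered by the renderer
// scan: accelerated / online flags plus the virtual screen the renderer maps
// to (-1 means it has no virtual screen in the current pixel format).
struct RendererSummary {
  int rendererID;
  bool accelerated;
  bool online;
  int virtualScreen;
};

// Returns the virtual screen of the first renderer that is both hardware
// accelerated (a GPU) and offline (not driving a display), or -1 if none.
// -1 matches the "use the GLFW default" convention of --virtual_screen.
int FindOfflineGPUVirtualScreen(const std::vector<RendererSummary>& renderers) {
  for (const RendererSummary& r : renderers) {
    if (r.accelerated && !r.online && r.virtualScreen >= 0) {
      return r.virtualScreen;
    }
  }
  return -1;
}
```

For instance, with made-up data mirroring the Mac Pro layout in the flag help text (primary GPU online on screen 1, secondary GPU offline on screen 0, software renderer on screen 2), it returns 0.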

So here it is:

```cpp
// Note: I've left in a lot of information gathering about the OpenGL renderers
// that I thought might be helpful to those looking; it can be deleted if you
// want leaner code. For example, if you don't know the virtual screen number
// up front, you could cycle through the renderers to find the desired virtual
// screen, checking that they are:
//   i)  accelerated (i.e. a GPU)
//   ii) offline (renderers not connected to a display, e.g. the second GPU on
//       a Mac Pro)

#include <string>
#include <vector>

#include <gflags/gflags.h>
#include <glog/logging.h>

#include <GL/glew.h>
#include <GLFW/glfw3.h>
#include <OpenGL/OpenGL.h>

DEFINE_int32(glfw_context_version_major, 3, "GLFW major context version");
DEFINE_int32(glfw_context_version_minor, 2, "GLFW minor context version");
DEFINE_int32(virtual_screen, -1,
             "Use a specific virtual screen, which is currently believed to be "
             "a stable identifier. -1 == default. On Mac Pro: 0 == Secondary "
             "GPU. 1 == Primary GPU. 2 == Software.");

// Struct to hold renderer info (defaults avoid printing uninitialised values)
struct GLRendererInfo {
  GLint rendererID = 0;      // Renderer ID number
  GLint accelerated = 0;     // Whether hardware accelerated
  GLint online = 0;          // Whether renderer (/GPU) is online. Second GPU on Mac Pro is offline
  GLint virtualScreen = -1;  // Virtual screen number (-1 == none found)
  GLint videoMemoryMB = 0;
  GLint textureMemoryMB = 0;
  const GLubyte *vendor = nullptr;  // GL_VENDOR string for this renderer
};

// Forward GLFW errors to glog (stands in for our own glfw::GLFWErrorCallback,
// which is not shown in this post)
static void GLFWErrorCallback(int error, const char *description) {
  LOG(ERROR) << "GLFW error " << error << ": " << description;
}

GLFWwindow *InitialiseOpenGLContext(const unsigned int &width,
                                    const unsigned int &height,
                                    const std::string &name) {
  // Initialise GLFW context
  glfwSetErrorCallback(GLFWErrorCallback);
  LOG_IF(FATAL, !glfwInit()) << "GLFW failed to initialise";
  glfwWindowHint(GLFW_CONTEXT_VERSION_MAJOR, FLAGS_glfw_context_version_major);
  glfwWindowHint(GLFW_CONTEXT_VERSION_MINOR, FLAGS_glfw_context_version_minor);
  glfwWindowHint(GLFW_OPENGL_FORWARD_COMPAT, GLFW_TRUE);
  glfwWindowHint(GLFW_OPENGL_PROFILE, GLFW_OPENGL_CORE_PROFILE);
  glfwWindowHint(GLFW_SAMPLES, 0);
  glfwWindowHint(GLFW_VISIBLE, GLFW_FALSE);
  glfwWindowHint(GLFW_REFRESH_RATE, GLFW_DONT_CARE);
  // This is so all OpenGL renderers are visible (GPUs / software)
  glfwWindowHint(GLFW_COCOA_GRAPHICS_SWITCHING, GLFW_TRUE);

  // Initialise GLFW window
  GLFWwindow *window = glfwCreateWindow(width, height, name.c_str(), nullptr, nullptr);
  LOG_IF(FATAL, !window) << "GLFW failed to create window";
  glfwMakeContextCurrent(window);

  // Initialise GLEW
  glewExperimental = GL_TRUE;
  LOG_IF(FATAL, glewInit() != GLEW_OK) << "GLEW failed to initialise";
  glGetError();  // glewInit can leave a spurious GL_INVALID_ENUM in core profiles

  // Find and swap the OpenGL context to the desired virtual screen.
  // We use -1 to keep the GLFW default.
  if (FLAGS_virtual_screen < 0) {
    LOG(INFO) << "OpenGL Renderer: Using GLFW default.";
  } else {
    LOG(INFO) << "OpenGL Renderer: Using selected virtual screen: " << FLAGS_virtual_screen;

    // Grab the GLFW context and pixel format for future calls
    CGLContextObj contextObject = CGLGetCurrentContext();
    CGLPixelFormatObj pixel_format = CGLGetPixelFormat(contextObject);

    // Number of renderers (across all displays)
    CGLRendererInfoObj rend = nullptr;
    GLint nRenderers = 0;
    CGLQueryRendererInfo(0xffffffff, &rend, &nRenderers);

    // Number of virtual screens
    GLint nVirtualScreens = 0;
    CGLDescribePixelFormat(pixel_format, 0, kCGLPFAVirtualScreenCount, &nVirtualScreens);

    // Get renderer information
    std::vector<GLRendererInfo> Renderers(nRenderers);
    for (GLint i = 0; i < nRenderers; ++i) {
      CGLDescribeRenderer(rend, i, kCGLRPOnline, &(Renderers[i].online));
      CGLDescribeRenderer(rend, i, kCGLRPAccelerated, &(Renderers[i].accelerated));
      CGLDescribeRenderer(rend, i, kCGLRPRendererID, &(Renderers[i].rendererID));
      CGLDescribeRenderer(rend, i, kCGLRPVideoMemoryMegabytes, &(Renderers[i].videoMemoryMB));
      CGLDescribeRenderer(rend, i, kCGLRPTextureMemoryMegabytes, &(Renderers[i].textureMemoryMB));
    }
    CGLDestroyRendererInfo(rend);  // release the renderer info object

    // Get the virtual screen (and vendor) corresponding to each renderer
    for (GLint i = 0; i < nVirtualScreens; ++i) {
      CGLSetVirtualScreen(contextObject, i);
      GLint r = 0;
      CGLGetParameter(contextObject, kCGLCPCurrentRendererID, &r);
      for (GLint j = 0; j < nRenderers; ++j) {
        if (Renderers[j].rendererID == r) {
          Renderers[j].virtualScreen = i;
          Renderers[j].vendor = glGetString(GL_VENDOR);
        }
      }
    }

    // Print out information on the renderers
    VLOG(1) << "No. renderers: " << nRenderers
            << " No. virtual screens: " << nVirtualScreens;
    for (GLint i = 0; i < nRenderers; ++i) {
      const GLubyte *vendor = Renderers[i].vendor;
      VLOG(1) << "Renderer: " << i
              << " Virtual Screen: " << Renderers[i].virtualScreen
              << " Renderer ID: " << Renderers[i].rendererID
              << " Vendor: " << (vendor ? reinterpret_cast<const char *>(vendor) : "n/a")
              << " Accelerated: " << Renderers[i].accelerated
              << " Online: " << Renderers[i].online
              << " Video Memory MB: " << Renderers[i].videoMemoryMB
              << " Texture Memory MB: " << Renderers[i].textureMemoryMB;
    }

    // Set the context to our desired virtual screen (and therefore OpenGL renderer)
    bool found = false;
    for (GLint i = 0; i < nRenderers && !found; ++i) {
      if (Renderers[i].virtualScreen == FLAGS_virtual_screen) {
        CGLSetVirtualScreen(contextObject, Renderers[i].virtualScreen);
        found = true;
      }
    }
    LOG_IF(FATAL, !found) << "Could not find requested virtual screen: "
                          << FLAGS_virtual_screen;
  }

  // Set default colour value to zero
  glClearColor(0, 0, 0, 0);
  // Clear initialisation errors
  (void)glGetError();
  // Return pointer to GLFW window
  return window;
}
```

And that's it!

Results

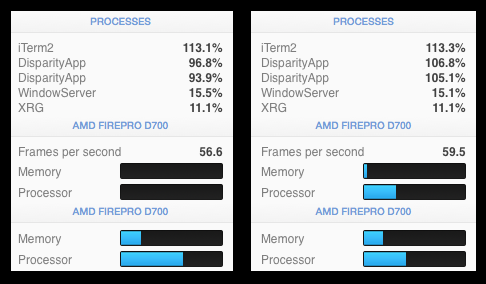

To check that its working we ran two instances of the previously mentioned DisparityApp at the same time on the Mac Pro. First on the same AMD card and then on separate cards. We used iStat Menus to monitor the GPU usage and you can see that for the case on the left all the load is on the second AMD GPU, whereas on the right the load is split across the GPUs.

iStat Menus GPU usage of both OpenGL instances on one GPU (left) and split across theGPUs (right) A single instance of the DisparityApp runs at around 30Hz (~30ms). As expected when putting both DisparityApp instances on a single GPU this drops to around 15Hz (~60ms) on the left above which is what we were doing previously. Now by targeting the relevant virtual screens to split the instances on different GPUs each runs at 30Hz on the right.
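To make the arithmetic explicit: a rate of f Hz corresponds to a period of 1000/f ms (so 30Hz is strictly ~33ms and 15Hz ~67ms), and the total throughput is the number of instances times the per-instance rate. A trivial sketch of both conversions, with hypothetical function names:

```cpp
#include <cassert>
#include <cmath>

// Frame period in milliseconds for an update rate given in Hz.
double PeriodMs(double rateHz) { return 1000.0 / rateHz; }

// Total frames per second delivered by several instances running at the
// same per-instance rate.
double AggregateHz(int instances, double perInstanceHz) {
  return instances * perInstanceHz;
}
```

Two instances sharing one GPU therefore deliver 2 × 15 = 30 frames per second in total, the same as a single instance on its own, which is consistent with one instance already saturating a GPU. Splitting them across both GPUs doubles the total to 60.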

We also tested the code on a MacBook Pro, which gave us the option to run the OpenGL code on the Intel Iris Pro renderer (or our Titan Xp eGPU) if we want to reserve the AMD GPU for another purpose.
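Picking the Intel renderer this way still requires knowing its virtual screen number. One hypothetical alternative is to match on the GL_VENDOR string collected during the renderer scan in the code above. A minimal sketch; the struct and function names are invented for illustration, and the vendor strings in any example data should be treated as placeholders rather than guaranteed values.

```cpp
#include <cassert>
#include <string>
#include <vector>

// One entry per virtual screen: the GL_VENDOR string reported after switching
// the context to that screen with CGLSetVirtualScreen.
struct VirtualScreenVendor {
  int virtualScreen;
  std::string vendor;
};

// Returns the first virtual screen whose vendor string contains `needle`
// (case-sensitive substring match), or -1 if there is none.
int FindVirtualScreenByVendor(const std::vector<VirtualScreenVendor>& screens,
                              const std::string& needle) {
  for (const VirtualScreenVendor& s : screens) {
    if (s.vendor.find(needle) != std::string::npos) return s.virtualScreen;
  }
  return -1;
}
```

Vendor strings are a convenient but fragile key: they cannot tell two identical GPUs apart (such as the Mac Pro's pair of D700s), where the accelerated/online flags or the renderer ID are the better choice.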

Conclusion

The code above lets you target specific OpenGL renderers on OSX, giving better performance and better use of the available hardware. Combined with the eGPU we have, this opens the door to a lot more OpenGL throughput on our systems (coming in a future post).

If you have any questions about the above code (or any suggestions to improve it), let me know.