Driven to Distraction: Self-Supervised Distractor Learning for Robust Monocular Visual Odometry in Urban Environments

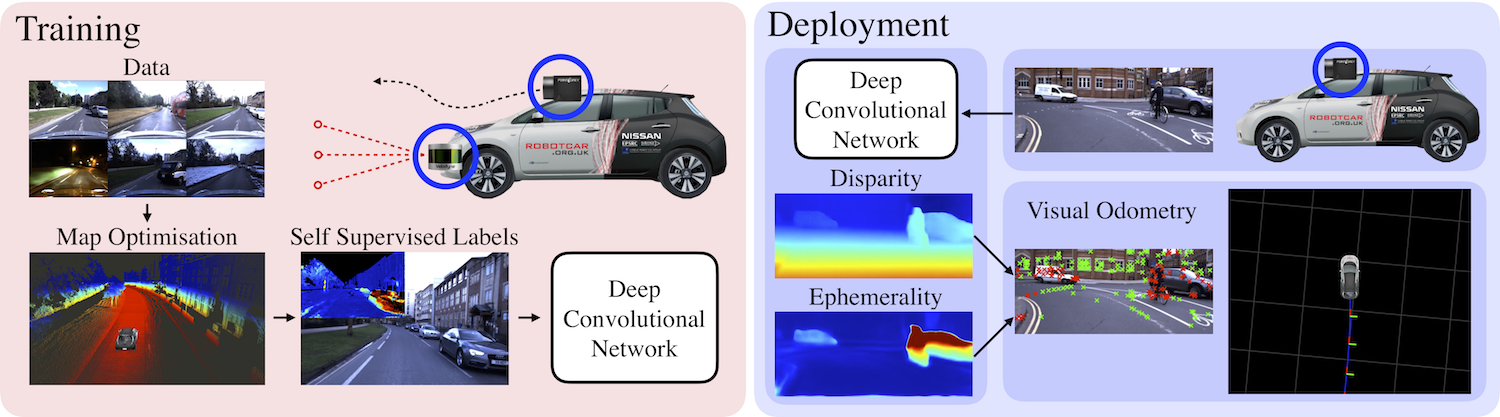

Abstract - We present a self-supervised approach to ignoring “distractors” in camera images for the purposes of robustly estimating vehicle motion in cluttered urban environments. We leverage offline multi-session mapping approaches to automatically generate a per-pixel ephemerality mask and depth map for each input image, which we use to train a deep convolutional network. At run-time we use the predicted ephemerality and depth as inputs to a monocular visual odometry (VO) pipeline, using either sparse features or dense photometric matching. Our approach yields metric-scale VO using only a single camera and can recover the correct egomotion even when 90% of the image is obscured by dynamic, independently moving objects. We evaluate our robust VO methods on more than 400 km of driving from the Oxford RobotCar Dataset and demonstrate reduced odometry drift and significantly improved egomotion estimation in the presence of large moving vehicles in urban traffic.
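The abstract describes feeding the predicted ephemerality and depth into either a sparse-feature or a dense photometric VO front end. The following is a minimal sketch of how such per-pixel predictions could be consumed, assuming images stored as plain Python lists indexed `[v][u]`, an ephemerality value in [0, 1] where 1 marks a likely distractor, and a pinhole camera. The function names, the 0.5 threshold, and the simple `(1 - ephemerality)` weighting are hypothetical illustrations, not the authors' implementation.

```python
from typing import List, Sequence, Tuple

# Hypothetical types: images are row-major grids of floats indexed [v][u].
Image = Sequence[Sequence[float]]
Pixel = Tuple[int, int]  # (u, v) = (column, row)


def weighted_photometric_error(ref: Image, warped: Image, ephemerality: Image) -> float:
    """Dense case (hypothetical): mean absolute intensity residual in which
    each pixel is weighted by (1 - ephemerality), so pixels predicted to be
    distractors contribute little or nothing to the alignment cost."""
    num = 0.0
    den = 0.0
    for row_ref, row_warp, row_eph in zip(ref, warped, ephemerality):
        for r, w, e in zip(row_ref, row_warp, row_eph):
            weight = 1.0 - e
            num += weight * abs(r - w)
            den += weight
    # If every pixel is fully ephemeral there is nothing left to align against.
    return num / den if den > 0.0 else float("inf")


def select_static_features(keypoints: List[Pixel], ephemerality: Image,
                           threshold: float = 0.5) -> List[Pixel]:
    """Sparse case (hypothetical): keep only keypoints whose predicted
    ephemerality is below an assumed threshold."""
    return [(u, v) for (u, v) in keypoints if ephemerality[v][u] < threshold]


def backproject(u: float, v: float, depth: float,
                fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Lift a pixel to a 3D point with a pinhole model. If the depth is
    metric, the point (and any translation estimated from it) is metric too."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)
```

For instance, with a 2×2 image whose only mismatched pixel is marked fully ephemeral, `weighted_photometric_error` returns 0.0, whereas an all-zero mask on the same images gives 1.5. The `backproject` helper shows why a predicted depth map matters: without it, a single camera only recovers translation up to an unknown scale.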

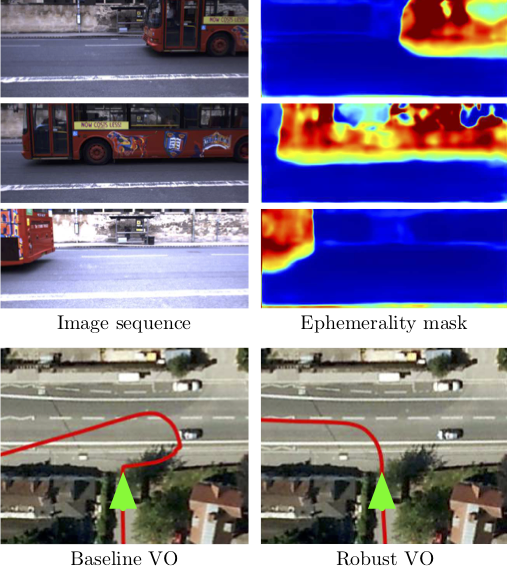

Example - In the figure above we show our robust motion estimation in urban environments using a single camera and a learned ephemerality mask. When making a left turn onto a main road, a large bus passes in front of the vehicle (green arrow), obscuring the view of the scene (top left). Our learned ephemerality mask correctly identifies the bus as an unreliable region of the image for the purposes of motion estimation (top right). Traditional VO approaches incorrectly estimate a strong translational motion to the right due to the dominant motion of the bus (bottom left), whereas our approach correctly recovers the vehicle egomotion (bottom right).
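The failure mode in this example can be reproduced with a deliberately simple toy: treat egomotion as a single 2D image translation, estimated as the weighted mean of per-feature flow vectors. The numbers are invented for illustration (four background features and six on a passing "bus"), and the weighted mean stands in for the robust estimators a real VO pipeline would use; it is not the method from the paper.

```python
from typing import List, Tuple

Flow = Tuple[float, float]  # (du, dv) image-plane displacement of one feature


def weighted_translation(flows: List[Flow], weights: List[float]) -> Flow:
    """Toy egomotion estimate: weighted mean of per-feature flow vectors."""
    total = sum(weights)
    if total == 0.0:
        raise ValueError("every feature was masked out")
    du = sum(w * f[0] for w, f in zip(weights, flows)) / total
    dv = sum(w * f[1] for w, f in zip(weights, flows)) / total
    return du, dv


# Hypothetical scene: the static background flows left, while a large bus
# flows right and covers most of the tracked features.
background = [(-2.0, 0.0)] * 4
bus = [(6.0, 0.0)] * 6
flows = background + bus
ephemerality = [0.0] * len(background) + [1.0] * len(bus)

# Unweighted: the bus dominates and the estimate points the wrong way.
naive = weighted_translation(flows, [1.0] * len(flows))
# Weighted by (1 - ephemerality): only the static background contributes.
masked = weighted_translation(flows, [1.0 - e for e in ephemerality])

print("naive :", naive)   # (2.8, 0.0)
print("masked:", masked)  # (-2.0, 0.0)
```

The unweighted estimate reports motion in the direction of the bus, mirroring the bottom-left panel, while down-weighting ephemeral features recovers the background motion. A real pipeline would also use outlier rejection, but once distractors cover most of the image they can form the consensus themselves, which is what a learned mask is meant to prevent.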

Further Info - For more experimental details, please use the following links, watch the project video, or drop me an email:

[Paper] [Video] [Poster1] [Poster2]

@inproceedings{BarnesICRA2018,
  address = {Brisbane},
  author = {Barnes, Dan and Maddern, Will and Pascoe, Geoffrey and Posner, Ingmar},
  title = {Driven to Distraction: Self-Supervised Distractor Learning for Robust Monocular Visual Odometry in Urban Environments},
  booktitle = {Proceedings of the IEEE International Conference on Robotics and Automation (ICRA)},
  month = {May},
  url = {https://arxiv.org/abs/1711.06623},
  pdf = {https://arxiv.org/pdf/1711.06623.pdf},
  year = {2018}
}