Masking by Moving: Learning Distraction-Free Radar Odometry from Pose Information

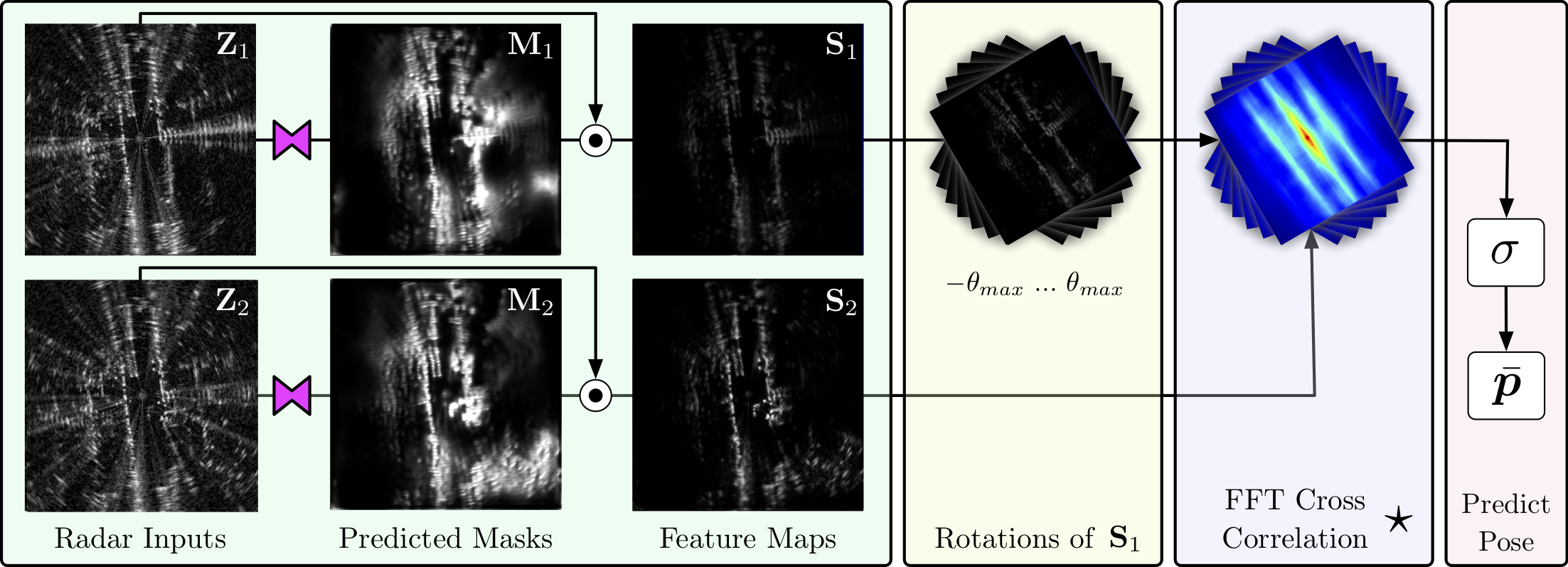

Abstract - This paper presents an end-to-end radar odometry system which delivers robust, real-time pose estimates based on a learned embedding space free of sensing artefacts and distractor objects. The system deploys a fully differentiable, correlation-based radar matching approach. This provides the same level of interpretability as established scan-matching methods and allows for a principled derivation of uncertainty estimates. The system is trained in a (self-)supervised way using only previously obtained pose information as a training signal. Using 280km of urban driving data, we demonstrate that our approach outperforms the previous state-of-the-art in radar odometry by reducing errors by up to 68% whilst running an order of magnitude faster.
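The idea of correlation-based matching with an uncertainty estimate can be illustrated with a minimal sketch. The assumptions here are ours, not the paper's: the inputs are two Cartesian radar power images of equal size, only 2D translation is estimated (rotation is ignored), the optional masks are plain arrays standing in for the output of a learned network, and `soft_correlation_shift` and its `temperature` parameter are hypothetical names. A dense cross-correlation over all pixel shifts is computed with the FFT, a softmax turns it into a distribution over shifts, and the mean and covariance of that distribution give a soft-argmax shift estimate and an uncertainty proxy. This NumPy version is not trained, but every step (masking, FFT, softmax, weighted sums) is differentiable, so the same operations written in an autodiff framework could in principle be trained end to end.

```python
import numpy as np


def soft_correlation_shift(source, target, source_mask=None, target_mask=None,
                           temperature=1.0):
    """Estimate the 2D pixel translation (dy, dx) taking `source` to `target`.

    Hypothetical sketch, not the authors' implementation. Returns the
    soft-argmax shift and a 2x2 covariance used as an uncertainty proxy.
    """
    # Optional masks suppress artefacts/distractors before matching.
    # Here they are just given arrays (assumption); in a learned system
    # they would be predicted by a network.
    if source_mask is not None:
        source = source * source_mask
    if target_mask is not None:
        target = target * target_mask

    # Dense circular cross-correlation over every integer shift d:
    # corr[d] = sum_p source[p] * target[p + d], computed via the FFT.
    corr = np.real(np.fft.ifft2(np.conj(np.fft.fft2(source)) * np.fft.fft2(target)))

    # Softmax over the correlation surface -> probability of each shift.
    logits = corr / temperature
    probs = np.exp(logits - logits.max())
    probs /= probs.sum()

    # Signed pixel offset for each FFT index, e.g. [0, 1, ..., -2, -1].
    h, w = corr.shape
    dy, dx = np.meshgrid(np.fft.fftfreq(h, d=1.0 / h),
                         np.fft.fftfreq(w, d=1.0 / w), indexing="ij")
    offsets = np.stack([dy.ravel(), dx.ravel()], axis=1)  # shape (h*w, 2)
    p = probs.ravel()

    # Soft-argmax (expected shift) and covariance of the shift distribution.
    mean = p @ offsets
    centred = offsets - mean
    cov = (centred * p[:, None]).T @ centred
    return mean, cov


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    source = rng.standard_normal((64, 64))
    # Synthetic "next scan": the same image translated by (3, -5) pixels.
    target = np.roll(source, shift=(3, -5), axis=(0, 1))
    shift, cov = soft_correlation_shift(source, target)
    print(np.round(shift, 3))  # expected to recover roughly (3, -5)
```

Because the correlation is circular, shifts are only unambiguous within half the image size along each axis. Raising `temperature` spreads probability over neighbouring shifts and inflates the covariance, while lowering it approaches a hard argmax.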

Data - For this paper we use our recently released Oxford Radar RobotCar Dataset.

Further Info - For more experimental details please use the following links, watch the project video below, or drop me an email:

[Paper] [Video] [Poster] [Dataset]

@inproceedings{Barnes2019MaskingByMoving,
  author = {Dan Barnes and Rob Weston and Ingmar Posner},
  title = {Masking by Moving: Learning Distraction-Free Radar Odometry from Pose Information},
  booktitle = {{C}onference on {R}obot {L}earning ({CoRL})},
  url = {https://arxiv.org/pdf/1909.03752},
  pdf = {https://arxiv.org/pdf/1909.03752.pdf},
  year = {2019}
}